by Rudolf Jaksa and Peter Sincák

The Computational Intelligence Group at TU Kosice conducts research on the application of computational intelligence (CI) tools to image processing, prediction problems and mobile robot control. In mobile robotics, recent work focuses on reinforcement learning methods and their application to LEGO Mindstorms mobile robots.

Mobile robotics is a typical application area for advanced intelligent control methods, including artificial neural networks (ANNs), evolutionary strategies and fuzzy systems. A common problem in this area is robot navigation: following a desired trajectory, searching for a path to a target position, wall following or obstacle avoidance. In practical applications these tasks correspond to problems such as automatic parking and driving of a car, driving flexible automated guided vehicles without a prescribed path, or controlling autonomous robots without direct manual supervision. So-called Internet robots are popular nowadays; although they operate in a very different environment from classical mobile robots, they face very similar navigation problems and can use techniques developed for mobile robot navigation. It can be claimed that ANNs are one of the key technologies in mobile robotics.

The most advanced methods in neurocontrol are based on reinforcement learning techniques, sometimes also called approximate dynamic programming. These methods are able to control processes in a way that is approximately optimal with respect to any given criterion. For instance, when searching for an optimal trajectory to a target position, the distance of the robot from the target can simply be used as the criterion function. The algorithm computes the steering and acceleration signals for the vehicle, and over a few trials (the number depends on the problem and the algorithm used) the system improves its performance until the resulting trajectory is close to optimal. The freedom in choosing the criterion function and the ability to derive a control strategy from trial-and-error experience alone are very strong advantages of this method.
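To make the idea of a distance-based criterion concrete, the following minimal sketch evaluates one trial of a controller by accumulating the robot's distance from the target. The kinematic vehicle model, the function names (`step`, `criterion`, `evaluate_trial`) and the two hand-written controllers are illustrative assumptions, not the simulator or controllers used in the research described here.

```python
import math

def step(state, steering, acceleration, dt=0.1):
    """Advance a simple kinematic vehicle model (an assumed model for illustration)."""
    x, y, heading, speed = state
    speed = max(0.0, speed + acceleration * dt)
    heading += steering * dt
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return (x, y, heading, speed)

def criterion(state, target):
    """Criterion function: Euclidean distance of the robot from the target."""
    return math.hypot(target[0] - state[0], target[1] - state[1])

def evaluate_trial(controller, start, target, steps=200):
    """Run one trial and return the accumulated criterion (lower is better)."""
    state, total = start, 0.0
    for _ in range(steps):
        steering, acceleration = controller(state, target)
        state = step(state, steering, acceleration)
        total += criterion(state, target)
    return total

def toward_target(state, target):
    """Hypothetical controller: steer proportionally to the bearing error."""
    x, y, heading, speed = state
    bearing = math.atan2(target[1] - y, target[0] - x)
    # Wrap the angular error into [-pi, pi].
    error = math.atan2(math.sin(bearing - heading), math.cos(bearing - heading))
    return 2.0 * error, (0.5 if speed < 1.0 else 0.0)

def straight_ahead(state, target):
    """Hypothetical baseline: never steer, ignore the target."""
    return 0.0, (0.5 if state[3] < 1.0 else 0.0)

start, target = (0.0, 0.0, 0.0, 0.0), (-5.0, 5.0)
good = evaluate_trial(toward_target, start, target)
bad = evaluate_trial(straight_ahead, start, target)
```

A learning algorithm would use a quantity like the one returned by `evaluate_trial` as the signal to improve the controller from trial to trial; here the two fixed controllers only show that steering toward the target scores lower than driving straight ahead.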

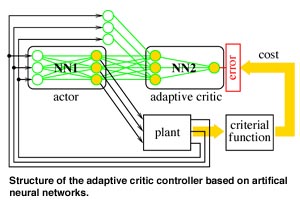

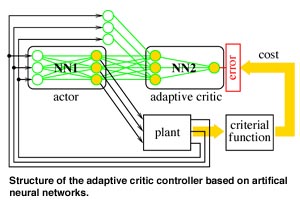

There are many variants of reinforcement learning. A common class of these algorithms is based on the adaptive critic (AC) approach. This approach has two main components: the adaptive critic, which evaluates the behavior of the whole control system, and the so-called actor, which is adapted to optimize the evaluation produced by the adaptive critic. Both elements can be realized using ANNs. Our research focuses on the complexity of the actor and adaptive critic networks and its influence on control performance. The figure shows the structure of an AC controller based on ANNs.
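The interplay of the two components can be sketched in a few lines. This is not the authors' controller: for brevity it replaces the ANNs with linear function approximators and uses a generic temporal-difference actor-critic update with a Gaussian policy, which is only one of several adaptive critic designs. All class names, learning rates and the reward convention (higher is better, for example the negative distance to the target) are assumptions made for illustration.

```python
import random

class Critic:
    """Linear value estimate V(s); stands in for the adaptive critic network."""
    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.lr = lr

    def value(self, features):
        return sum(w * f for w, f in zip(self.w, features))

    def update(self, features, td_error):
        # Move the estimate for this state in the direction of the TD error.
        self.w = [w + self.lr * td_error * f for w, f in zip(self.w, features)]

class Actor:
    """Gaussian policy with a linear mean; stands in for the actor network."""
    def __init__(self, n_features, lr=0.01, sigma=0.5):
        self.theta = [0.0] * n_features
        self.lr = lr
        self.sigma = sigma

    def mean(self, features):
        return sum(t * f for t, f in zip(self.theta, features))

    def act(self, features, rng):
        # Exploration: sample the control signal around the current mean.
        return rng.gauss(self.mean(features), self.sigma)

    def update(self, features, action, td_error):
        # Gradient of the log-probability of a Gaussian policy w.r.t. its mean.
        scale = (action - self.mean(features)) / self.sigma ** 2
        self.theta = [t + self.lr * td_error * scale * f
                      for t, f in zip(self.theta, features)]

def actor_critic_step(actor, critic, features, action, reward,
                      next_features, gamma=0.95):
    """One learning step: the critic's TD error adapts both components."""
    td_error = (reward + gamma * critic.value(next_features)
                - critic.value(features))
    critic.update(features, td_error)
    actor.update(features, action, td_error)
    return td_error

rng = random.Random(0)
actor, critic = Actor(2), Critic(2)
sampled = actor.act([1.0, 0.5], rng)  # exploratory control signal
td = actor_critic_step(actor, critic, [1.0, 0.5], action=1.0,
                       reward=1.0, next_features=[0.0, 0.0])
```

In this single step the action turned out better than the critic expected (positive TD error), so the critic raises its evaluation of the state and the actor shifts its mean toward the action that was taken.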

Our recent experiments were carried out on a software simulator of the mobile robot. This simulator was also used in experiments with supervised neurocontrol and fuzzy control of a mobile robot. Besides the simulation, control of a real LEGO robot was accomplished. Our first goal was achieved recently: we can repeat experiments with sufficient accuracy both in the simulation environment and on a real robot. In general, we can confirm that reinforcement learning based neurocontrollers are a progressive part of control technology, and we believe they will play an important role in the intelligent technologies of the 21st century.

Links:

CIG homepage: http://neuron-ai.tuke.sk/cig

Please contact:

Rudolf Jaksa - TU Kosice

Tel: +421 95 602 5101

E-mail: jaksa@neuron-ai.tuke.sk