|

|

ERCIM News No.46, July 2001 [contents]

|

by Eric Pauwels

In the EU-funded FOUNDIT project researchers at CWI are designing search engines that can be taught what images to look for on the basis of evidence supplied by the user.

Vision is an amazingly powerful source of information about the world around us. As a consequence, a considerable part of the data we collect comes in the shape of visual material, and multimedia libraries are rapidly filling up with images and video-footage. However, while progress in electronics reduces the costs of collecting and storing all these images, an efficient database search for specific material becomes increasingly difficult. As it is virtually impossible to classify images into simple unambiguous categories, retrieving them on the basis of their visual content seems to be the only viable option. Rather than asking the computer ‘to display image AK-C-4355.jpg’, we want to instruct it to retrieve an image showing, eg, the 3-dimensional structure of a DNA-molecule. Or, imagine an interior decorator looking for visual art to enliven his client’s newly refurbished offices, requesting the system to find vividly coloured abstract paintings. These scenarios call for vision-sentient computer systems that can assist humans by sifting through vast collections of images, setting aside the potentially interesting ones for closer inspection, while discarding the large majority of irrelevant ones. Being able to do so would effectively equip the search engine with an automated pair of eyes with which it can reduce for the user the drudgery of an exhaustive search. Such systems will have to be highly adaptive. Indeed, what constitutes an ‘interesting’ or ‘relevant’ image varies widely among users, and different applications call for different criteria by which these images are to be judged.

|

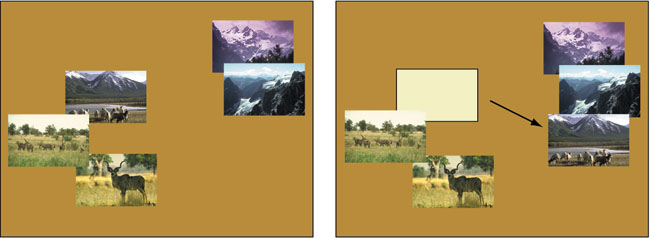

| Interactive definition of image similarity. Left: The interface displays five images grouped together to reflect its current model of image similarity. Right: If this grouping does not match the user’s appreciation of their similarity, he can rearrange them on screen into a more satisfactory configuration by a simple drag-and-drop operation. For example, if the user considers the top left image to be more reminiscent of mountains, he will drag it over to the image group on the right. In the next iteration step, the search engine will adapt its features to reproduce this user-defined similarity as faithfully as possible. |

In the FOUNDIT project search engines are designed that can be taught what images to look for on the basis of evidence supplied by the user. If the user classifies a small subset of images as similar and/or relevant, the system extracts the visual characteristics that correlate best with the userís classification, and generalises this ranking to the rest of the database. Only images marked as highly relevant are displayed for closer inspection and further relevance feedback, thus setting up an iterative cycle of fine-tuning. The interaction between user and interface is very natural and transparent: similarities between images are indicated by dragging them into piles on the screen, and relevant images are selected by simply clicking on them. Such operational transparency is of great importance for applications, such as

E-commerce.

Link:

http://www.cwi.nl/~pauwels/PNA4.1.html

Please contact:

Eric Pauwels — CWI

Tel: +31 20 592 4225

E-mail: Eric.Pauwels@cwi.nl